Vocapedia >

Technology > Computing, Computers

Science, Pioneers, Chips, Microprocessors,

File formats, Algorithms,

Data storage, Measurement units

Time Covers - The 50S

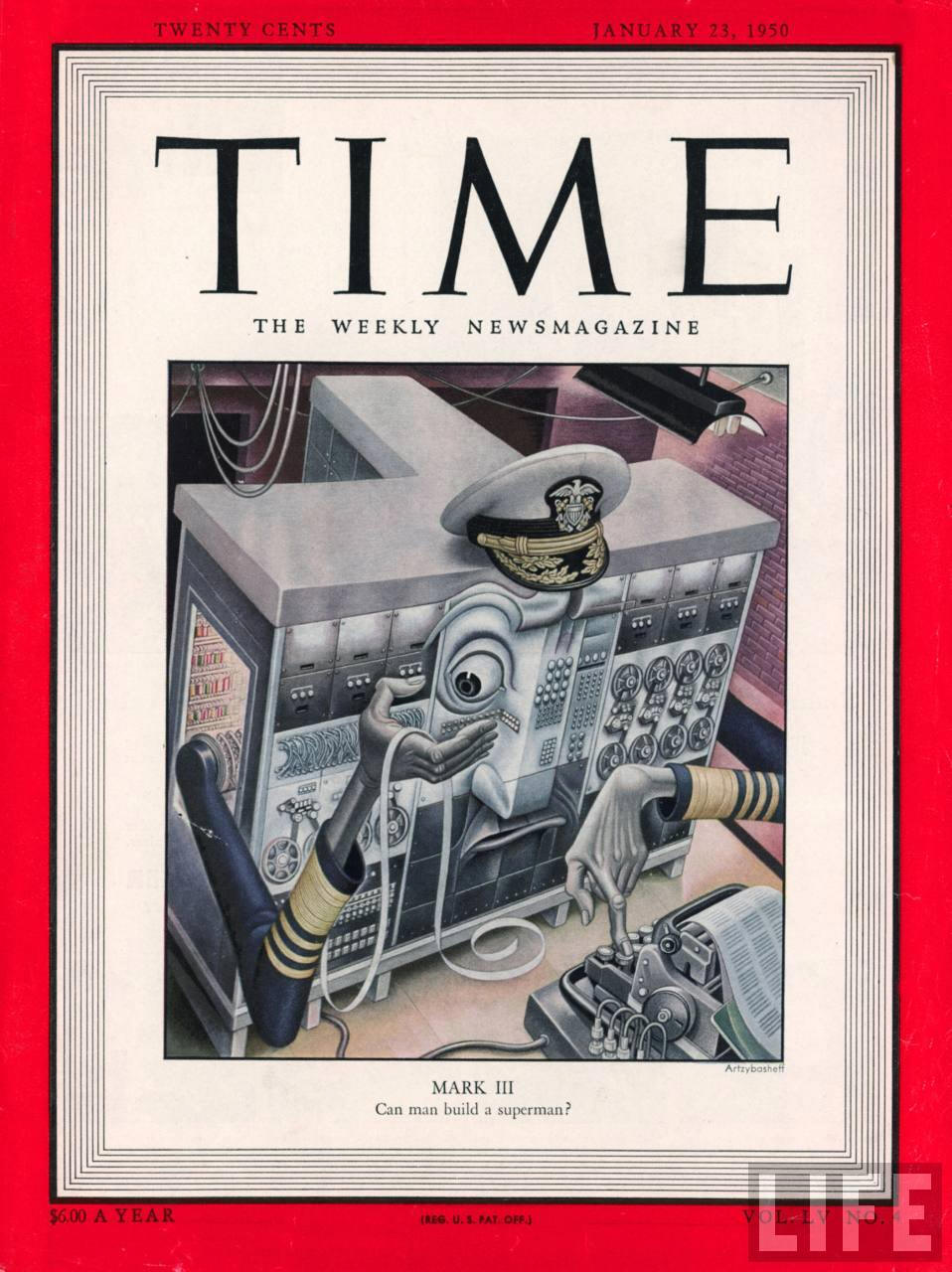

TIME cover 01-23-1950

caricature of the US Navy's Mark III computer.

Date taken: January 23, 1950

Photographer: Boris Artzybasheff

Life Image

http://images.google.com/hosted/life/ba1d3473b519ada3.html

silicon

a single piece of silicon

Silicon Valley USA

http://topics.nytimes.com/topics/news/national/

usstatesterritoriesandpossessions/california/

siliconvalley/index.html

https://www.nytimes.com/interactive/2017/10/13/

opinion/sunday/Silicon-Valley-Is-Not-Your-Friend.html

http://www.nytimes.com/2014/03/16/

magazine/silicon-valleys-youth-problem.html

http://www.nytimes.com/2013/11/27/

technology/in-silicon-valley-partying-like-its-1999-again.html

http://www.nytimes.com/2012/02/09/us/california-

housing-market-braces-for-facebook-millionaires.html

http://www.nytimes.com/2011/11/05/

technology/apple-woos-educators-with-trips-to-silicon-valley.html

http://dealbook.nytimes.com/2010/12/03/

a-silicon-bubble-shows-signs-of-reinflating/

chiplets USA

For more than 50 years,

designers of computer chips mainly used

one tactic to boost performance:

They shrank electronic components

to pack more power onto each piece of silicon.

Then more than a decade ago,

engineers at the chip maker

Advanced Micro Devices

began toying with a radical idea.

Instead of designing one big microprocessor

with vast numbers of tiny transistors,

they conceived of creating one from smaller chips

that would be packaged tightly together

to work like one electronic brain.

The concept,

sometimes called chiplets,

caught on in a big way

with AMD, Apple, Amazon, Tesla, IBM and Intel

introducing such products.

Chiplets rapidly gained traction

because smaller chips are cheaper to make,

while bundles of them

can top the performance of any single slice of silicon.

The strategy,

based on advanced packaging technology,

has since become an essential tool

to enabling progress in semiconductors.

And it represents one of the biggest shifts in years

for an industry that drives innovations in fields

like artificial intelligence, self-driving cars

and military hardware.

“Packaging is where the action is going to be,”

said Subramanian Iyer,

a professor of electrical and computer engineering

at the University of California, Los Angeles,

who helped pioneer the chiplet concept.

“It’s happening because there is actually no other way.”

https://www.nytimes.com/2023/05/11/

technology/us-chiplets-tech.html

Flying Microchips The Size Of A Sand Grain

Could Be Used For Population Surveillance

USA

https://www.npr.org/2021/09/23/

1040035430/flying-microchip-sand-grain-northwestern-winged

ultradense

computer chips USA

http://www.nytimes.com/2015/07/09/

technology/ibm-announces-computer-chips-more-powerful-than-any-in-existence.html

nanotube chip breakthrough USA

http://bits.blogs.nytimes.com/2012/10/28/

i-b-m-reports-nanotube-chip-breakthrough/

IBM Develops a New Chip That Functions Like a Brain

USA

http://www.nytimes.com/2014/08/08/

science/new-computer-chip-is-designed-to-work-like-the-brain.html

microprocessor USA

http://www.npr.org/2016/03/22/

471389537/digital-pioneer-andrew-grove-led-intels-shift-from-chips-to-microprocessors

chip USA

http://www.npr.org/2016/03/22/

471389537/digital-pioneer-andrew-grove-led-intels-shift-from-chips-to-microprocessors

chip / semiconductor chips / processor

UK / USA

https://www.nytimes.com/topic/company/

intel-corporation

http://www.nytimes.com/2014/08/08/

science/new-computer-chip-is-designed-to-work-like-the-brain.html

http://www.nytimes.com/2010/08/31/

science/31compute.html

http://www.nytimes.com/2008/11/17/

technology/companies/17chip.html

http://www.theguardian.com/technology/2006/jun/29/

guardianweeklytechnologysection5

chip designer > ARM Holdings USA

https://www.nytimes.com/topic/company/arm-holdings-plc

http://www.nytimes.com/2010/09/20/

technology/20arm.html

chip maker USA

http://www.nytimes.com/2011/01/04/

technology/04chip.html

Julius Blank USA 1925-2011

mechanical engineer

who helped start

a computer chip company in the 1950s

that became a prototype for high-tech start-ups

and a training ground for a generation

of Silicon Valley entrepreneurs

http://www.nytimes.com/2011/09/23/

technology/julius-blank-who-built-first-chip-maker-dies-at-86.html

memory chip

chipset

component

superconductor

http://www.economist.com/science/displayStory.cfm?story_id=2724217

computing USA

http://www.nytimes.com/2009/09/07/

technology/07spinrad.html

computing engine

Intel USA

https://www.nytimes.com/topic/company/

intel-corporation

http://www.npr.org/2016/03/22/

471389537/digital-pioneer-andrew-grove-led-intels-shift-from-chips-to-microprocessors

http://www.nytimes.com/2013/04/15/

technology/intel-tries-to-find-a-foothold-beyond-pcs.html

processor

processing speed

performance

power

power-efficient

heat

circuitry

transistor

chip maker Texas Instruments Inc.

semiconductor

low-power semiconductors

semiconductor maker

nanotechnology

state of the art

USA

https://www.nytimes.com/column/state-of-the-art

data storage

http://www.wisegeek.org/what-is-data-storage.htm

bit

A bit is a single unit of data,

expressed as either

a "0" or a "1"

in binary code.

A string of eight bits

equals one byte.

Any character formed,

such as a letter of the alphabet,

a number, or a punctuation mark,

requires eight binary bits to describe it.

For example:

A = 01000001

B = 01000010

a = 01100001

b = 01100010

6 = 00110110

7 = 00110111

! = 00100001

@ = 01000000

http://www.wisegeek.org/what-is-mbps.htm
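
The table above can be reproduced with a short sketch. It assumes the characters are plain ASCII, where each character fits in one byte; the helper name `to_bits` is illustrative and does not come from the quoted source.

```python
def to_bits(ch: str) -> str:
    """Return the 8-bit binary string for a single one-byte character."""
    code = ord(ch)  # numeric code of the character, e.g. 65 for "A"
    if code > 0xFF:
        raise ValueError("character does not fit in a single byte")
    return format(code, "08b")  # zero-padded to eight binary digits


# Print the same characters listed in the example above.
for ch in "ABab67!@":
    print(ch, "=", to_bits(ch))
```

Characters outside the one-byte range (accented letters in some encodings, emoji) need more than eight bits, which is why the sketch rejects them instead of guessing.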

What is the Difference Between a Bit and a Byte?

A bit, short for binary digit,

is the smallest unit of measurement

used for information storage in computers.

A bit is represented

by a 1 or a 0 with a value of true or false,

sometimes expressed as on or off.

Eight bits form a single byte of information,

also known as an octet.

The difference between a bit and a byte is size,

or the amount of information stored.

http://www.wisegeek.org/what-is-the-difference-between-a-bit-and-a-byte.htm

1000 bits = 1 kilobit

1000 kilobits = 1 megabit

1000 megabits = 1 gigabit

http://www.wisegeek.com/what-a-gigabit.htm
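
As a rough illustration of the ladder above, the following sketch picks the largest decimal unit that fits a given number of bits. The function name and the abbreviations kb, Mb and Gb are choices made for this example only.

```python
KILOBIT = 1000            # bits in one kilobit
MEGABIT = 1000 * KILOBIT  # bits in one megabit
GIGABIT = 1000 * MEGABIT  # bits in one gigabit


def describe_bits(n_bits: int) -> str:
    """Express a bit count in the largest decimal unit that fits."""
    for abbrev, size in (("Gb", GIGABIT), ("Mb", MEGABIT), ("kb", KILOBIT)):
        if n_bits >= size:
            return f"{n_bits / size:g} {abbrev}"
    return f"{n_bits} bits"


print(describe_bits(2_500_000))  # 2.5 Mb
```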

computer memory

http://www.wisegeek.com/what-is-computer-memory.htm

Megabits per second (Mbps)

refers to data transfer speeds as measured in megabits (Mb).

This term is commonly used

in communications and data technology

to demonstrate the speed at which a transfer takes place.

A megabit is one million bits,

so "Mbps" indicates

the transfer of one million bits of data each second.

Data can be moved even faster than this,

measured by terms like gigabits per second (Gbps).

http://www.wisegeek.org/what-is-mbps.htm
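
A small sketch of what a speed in Mbps means in practice: it estimates how long a file takes to transfer. It assumes one megabit is exactly 1,000,000 bits and ignores protocol overhead; the function and parameter names are hypothetical.

```python
def transfer_seconds(size_bytes: int, link_mbps: float) -> float:
    """Estimate transfer time for a file over a link rated in Mbps."""
    bits = size_bytes * 8                  # eight bits per byte
    return bits / (link_mbps * 1_000_000)  # megabits per second -> bits per second


# A 100,000,000-byte file over a 50 Mbps link.
print(transfer_seconds(100_000_000, 50))  # 16.0
```

Because file sizes are usually quoted in bytes and link speeds in bits, the factor of eight is the step most often forgotten.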

megabyte

Megabytes (MBs) are collections of digital information.

The term commonly refers

to two different numbers of bytes,

where each byte contains eight bits.

The first definition of megabyte,

used mainly in the context of computer memory,

denotes 1 048 576, or 2^20 bytes.

The other definition,

used in most networking

and computer storage applications,

means 1 000 000, or 10^6 bytes.

Using either definition,

a megabyte is roughly

the file size of a 500-page e-book.

http://www.wisegeek.com/what-are-megabytes.htm
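
The two definitions can be compared directly. This is only a sketch; the constant and function names are invented for the example.

```python
MB_BINARY = 2 ** 20   # 1 048 576 bytes, the memory-oriented definition
MB_DECIMAL = 10 ** 6  # 1 000 000 bytes, the storage and networking definition


def size_in_megabytes(n_bytes: int) -> tuple[float, float]:
    """Return the size as (decimal megabytes, binary megabytes)."""
    return n_bytes / MB_DECIMAL, n_bytes / MB_BINARY


decimal_mb, binary_mb = size_in_megabytes(5_242_880)
print(decimal_mb, binary_mb)   # 5.24288 5.0
print(MB_BINARY - MB_DECIMAL)  # 48576 bytes separate the two definitions
```

The gap is a little under 5 percent per megabyte, which is why the same file can show slightly different sizes in different tools.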

gigabit Gb

A gigabit

is a unit of measurement used in computers,

equal to one billion bits of data.

A bit is the smallest unit of data.

It takes eight bits

to form or store a single character of text.

These 8-bit units are known as bytes.

Hence, the difference between a gigabit and a gigabyte

is that the latter is 8x greater, or eight billion bits.

http://www.wisegeek.com/what-a-gigabit.htm
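
The factor of eight between the two units can be checked with a minimal sketch, assuming the decimal meaning of "giga" (one billion). The function names are illustrative.

```python
BITS_PER_BYTE = 8
GIGA = 10 ** 9  # one billion


def gigabytes_to_bits(gigabytes: float) -> float:
    """Number of bits in the given number of gigabytes."""
    return gigabytes * GIGA * BITS_PER_BYTE


def gigabits_to_gigabytes(gigabits: float) -> float:
    """Convert gigabits to gigabytes by dividing by eight."""
    return gigabits / BITS_PER_BYTE


print(gigabytes_to_bits(1))      # 8000000000
print(gigabits_to_gigabytes(1))  # 0.125
```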

gigabyte

A gigabyte

is a term that indicates a definite value of data quantity

with regards to storage capacity or content.

It refers to an amount of something,

usually data of some kind, often stored digitally.

A gigabyte typically refers to 1 billion bytes.

http://www.wisegeek.com/what-is-a-gigabyte.htm

terabyte

A terabyte (TB)

is a large allocation of data storage capacity

applied most often to hard disk drives.

Hard disk drives are essential to computer systems,

as they store the operating system, programs, files and data

necessary to make the computer work.

Depending on what type of storage is being measured,

it can be equal to either 1,000 gigabytes (GB) or 1,024 GB;

disk storage is usually measured as the first,

while processor storage is usually measured as the second.

In the late 1980s,

the average home computer system

had a single hard drive

with a capacity of about 20 megabytes (MB).

By the mid 1990s,

average capacity increased to about 80 MBs.

Just a few years later,

operating systems alone required more room than this,

while several hundred megabytes

represented an average storage capacity.

As of 2005,

computer buyers think in terms of hundreds of gigabytes,

and this is already giving way to even greater storage.

http://www.wisegeek.org/what-is-a-terabyte.htm
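
The difference between the 1,000 GB and 1,024 GB readings explains why a drive sold as one terabyte can appear smaller on screen. The sketch below assumes the drive maker counts in powers of ten and the operating system counts in powers of two; the names are hypothetical.

```python
GB_DECIMAL = 10 ** 9  # bytes in a gigabyte, counting in powers of ten
GB_BINARY = 2 ** 30   # bytes in a gigabyte, counting in powers of two


def decimal_tb_in_binary_gb(terabytes: float) -> float:
    """Size of a decimal-terabyte drive when reported in binary gigabytes."""
    n_bytes = terabytes * 1000 * GB_DECIMAL
    return n_bytes / GB_BINARY


# A "1 TB" drive shows up as roughly 931 GB under the binary convention.
print(round(decimal_tb_in_binary_gb(1)))  # 931
```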

Petabyte

In the world of ever-growing data capacity,

a petabyte represents the frontier just ahead of the terabyte,

which itself runs just ahead of the gigabyte.

In other words, 1,024 gigabytes is one terabyte,

and 1,024 terabytes is one petabyte.

To put this in perspective,

a petabyte is about one million gigabytes (1,048,576).

http://www.wisegeek.com/what-is-a-petabyte.htm
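
The chain from gigabyte to terabyte to petabyte can be followed in code. This sketch uses the 1,024-step convention quoted above; the unit list and function name are assumptions made for the example.

```python
UNITS = ["bytes", "KB", "MB", "GB", "TB", "PB"]
GB_PER_PB = 1024 * 1024  # gigabytes in one petabyte: 1,048,576


def human_readable(n_bytes: int, base: int = 1024) -> str:
    """Format a byte count using the largest unit that keeps the value below `base`."""
    value = float(n_bytes)
    for unit in UNITS:
        # Stop at the first unit that fits, or at the largest unit available.
        if value < base or unit == UNITS[-1]:
            return f"{value:.1f} {unit}"
        value /= base


print(human_readable(1024 ** 5))  # 1.0 PB
print(GB_PER_PB)                  # 1048576
```

Passing `base=1000` gives the decimal convention instead, which is the one typically used for disk storage.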

file format > GIF - created in 1987

The compressed format of the GIF

allowed slow modem connections of the 1980s

to transfer images more efficiently.

The animation feature was added

in an updated version of the GIF file format.

https://www.npr.org/2022/03/23/

1088410133/gif-creator-dead-steve-wilhite-kathaleen-wife-interview

develop code

USA

http://www.nytimes.com/2013/01/13/

technology/aaron-swartz-internet-activist-dies-at-26.html

algorithm UK / USA

http://www.theguardian.com/commentisfree/video/2016/mar/16/

artificial-intelligence-we-should-be-more-afraid-of-computers-than-we-are-video

http://www.npr.org/sections/alltechconsidered/2015/06/07/

412481743/what-makes-algorithms-go-awry

project developer

gesture-recognition technology

http://www.nytimes.com/2010/10/30/

technology/30chip.html

hardware

computing

computing pioneer > Steven J. Wallach

USA

http://www.nytimes.com/2008/11/17/

technology/business-computing/17machine.html

computer

computer science USA

http://www.nytimes.com/2009/09/07/

technology/07spinrad.html

personal computer PC

https://www.nytimes.com/2019/07/12/

science/fernando-corbato-dead.html

http://www.nytimes.com/2012/03/06/

technology/as-new-ipad-debut-nears-some-see-decline-of-pcs.html

http://www.nytimes.com/2010/04/03/

business/03roberts.html

http://www.theguardian.com/commentisfree/2006/jul/29/

comment.mainsection3

personal computers powered by artificial intelligence

USA

https://www.nytimes.com/2024/06/23/

technology/personaltech/ai-phones-computers-privacy.html

PC tuneup USA

http://www.nytimes.com/2010/10/28/

technology/personaltech/28basics.html

computer UK

http://www.theguardian.com/commentisfree/video/2016/mar/16/

artificial-intelligence-we-should-be-more-afraid-of-computers-than-we-are-video

computer USA

http://www.nytimes.com/2010/10/28/

technology/personaltech/28basics.html

supercomputer UK / USA

https://www.npr.org/sections/health-shots/2020/03/24/

820381506/supercomputers-recruited-to-hunt-for-clues-to-a-covid-19-treatment

http://www.npr.org/sections/thetwo-way/2015/07/30/

427727355/obama-orders-development-of-supercomputer-to-rival-chinas-milky-way

http://www.guardian.co.uk/technology/shortcuts/2013/jan/11/

ibm-watson-supercomputer-cant-talk-slang

http://www.nytimes.com/2011/04/26/

science/26planetarium.html

quantum computer

performing complex calculations

thousands of times faster

than existing

supercomputers UK / USA

https://www.npr.org/2021/03/25/

981315128/is-the-future-quantum

https://www.nytimes.com/2019/01/08/

obituaries/roy-j-glauber-dead.html

http://bits.blogs.nytimes.com/2013/05/16/

google-buys-a-quantum-computer/

http://www.guardian.co.uk/science/2012/may/06/

quantum-computing-physics-jeff-forshaw

computerized surveillance systems

http://www.nytimes.com/2011/01/02/

science/02see.html

MITS Altair, the first inexpensive general-purpose microcomputer

http://www.nytimes.com/2010/04/03/

business/03roberts.html

ingestible computers

and minuscule sensors stuffed inside pills

http://bits.blogs.nytimes.com/2013/06/23/

disruptions-medicine-that-monitors-you/

The world's first multi-tasking computer - video

We take the ability to multi-task

on our computers for granted,

but it all started

with the Pilot Ace Computer

and the genius

of mathematician

Alan Turing

http://www.guardian.co.uk/science/video/2011/mar/01/

pilot-ace-computer-alan-turing

Alan Turing's Pilot Ace computer UK 12 April 2013

Built in the 1950s

and one of the Science Museum's 20th century icons,

The Pilot Ace "automatic computing engine"

was the world's first general purpose

computer

– and for a while was the fastest computer in the world.

We now take the ability to carry out

a range of tasks on our computers for

granted,

but it all started with the principles developed

by mathematician Alan Turing in

the 1930s

and his design for the Ace.

In this film, Professor Nick Braithwaite

of the Open

University

discusses its significance with Tilly Blyth,

curator of Computing and

Information

at the Science Museum

http://www.guardian.co.uk/science/video/2013/apr/12/

alan-turing-pilot-ace-computer-video

wall computers

http://www.theguardian.com/technology/2006/nov/12/

news.microsoft

surface computing

IBM PC UK

http://www.theguardian.com/technology/2006/aug/06/

billgates.microsoft

the IBM PC 5150 computer

construction of the ENIAC machine

at the

University of Pennsylvania in 1943 UK

http://www.guardian.co.uk/technology/2012/feb/26/

first-computers-john-von-neumann

HECToR, the UK's fastest machine

2008 UK

http://www.guardian.co.uk/technology/2008/jan/02/

computing.climatechange

computer services company

laptop / notebook

http://www.nytimes.com/2012/09/10/

technology/william-moggridge-laptop-pioneer-dies-at-69.html

http://www.guardian.co.uk/technology/2012/jun/11/

apple-ios-wwdc-google-maps

http://www.guardian.co.uk/money/2009/jun/16/

buying-a-laptop-saving-money

widescreen wireless notebook

James Loton Flanagan

1925-2015

pioneer in the field

of acoustics,

envisioning and

providing

the technical foundation

for speech recognition,

teleconferencing, MP3

music files

and the more efficient digital transmission

of human conversation

http://www.nytimes.com/2015/08/31/

business/james-l-flanagan-acoustical-pioneer-dies-at-89.html

Andrew Francis

Kopischiansky 1919-2014

designer of an early

portable computer, the Kaypro II,

that became a smash

hit in the early 1980s

before his company

fell into bankruptcy in the ’90s

as the computer

industry leapfrogged ahead of him

(...)

For a time, Mr. Kay’s

company, Kaypro,

was the world’s

largest portable computer maker,

ranked fourth in the

PC industry over all behind IBM,

Apple Computer and

RadioShack.

http://www.nytimes.com/2014/09/06/

business/andrew-kay-pioneer-in-computing-dies-at-95.html

Jack Tramiel (born Jacek Trzmiel in Lodz,

Poland) USA

1928-2012

hard-charging,

cigar-chomping tycoon

whose inexpensive,

immensely popular Commodore computers

helped ignite the personal computer industry

the way Henry Ford’s Model T

kick-started the mass production of automobiles

http://www.nytimes.com/2012/04/11/

technology/jack-tramiel-a-pioneer-in-computers-dies-at-83.html

Kenneth Harry Olsen USA

1926-2011

Ken Olsen helped reshape the computer industry

as a founder of the

Digital Equipment Corporation,

at one time the world’s second-largest computer company

http://www.nytimes.com/2011/02/08/

technology/business-computing/08olsen.html

robot

http://www.nytimes.com/2010/09/05/

science/05robots.html

robotic

http://www.nytimes.com/2010/09/05/

science/05robots.html

computer scientists

software engineers

Computers Make Strides in Recognizing Speech

June 2010

http://www.nytimes.com/2010/06/25/

science/25voice.html

Computers

Date taken: 1961

Photographer: Walter Sanders

Life Images

Corpus of news articles

Technology > Computing, Computers

Science, Pioneers, Chips, Microprocessors,

Data storage, Measurement units

John McCarthy, 84, Dies;

Computer Design Pioneer

October 25, 2011

The New York Times

By JOHN MARKOFF

John McCarthy, a computer scientist who helped design the foundation of

today’s Internet-based computing and who is widely credited with coining the

term for a frontier of research he helped pioneer, Artificial Intelligence, or

A.I., died on Monday at his home in Stanford, Calif. He was 84.

The cause was complications of heart disease, his daughter Sarah McCarthy said.

Dr. McCarthy’s career followed the arc of modern computing. Trained as a

mathematician, he was responsible for seminal advances in the field and was

often called the father of computer time-sharing, a major development of the

1960s that enabled many people and organizations to draw simultaneously from a

single computer source, like a mainframe, without having to own one.

By lowering costs, it allowed more people to use computers and laid the

groundwork for the interactive computing of today.

Though he did not foresee the rise of the personal computer, Dr. McCarthy was

prophetic in describing the implications of other technological advances decades

before they gained currency.

“In the early 1970s, he presented a paper in France on buying and selling by

computer, what is now called electronic commerce,” said Whitfield Diffie, an

Internet security expert who worked as a researcher for Dr. McCarthy at the

Stanford Artificial Intelligence Laboratory.

And in the study of artificial intelligence, “no one is more influential than

John,” Mr. Diffie said.

While teaching mathematics at Dartmouth in 1956, Dr. McCarthy was the principal

organizer of the first Dartmouth Conference on Artificial Intelligence.

The idea of simulating human intelligence had been discussed for decades, but

the term “artificial intelligence” — originally used to help raise funds to

support the conference — stuck.

In 1958, Dr. McCarthy moved to the Massachusetts Institute of Technology, where,

with Marvin Minsky, he founded the Artificial Intelligence Laboratory. It was at

M.I.T. that he began working on what he called List Processing Language, or

Lisp, a computer language that became the standard tool for artificial

intelligence research and design.

Around the same time he came up with a technique called garbage collection, in

which pieces of computer code that are not needed by a running computation are

automatically removed from the computer’s random access memory.

He developed the technique in 1959 and added it to Lisp. That technique is now

routinely used in Java and other programming languages.

His M.I.T. work also led to fundamental advances in software and operating

systems. In one, he was instrumental in developing the first time-sharing system

for mainframe computers.

The power of that invention would come to shape Dr. McCarthy’s worldview to such

an extent that when the first personal computers emerged with local computing

and storage in the 1970s, he belittled them as toys.

Rather, he predicted, wrongly, that in the future everyone would have a

relatively simple and inexpensive computer terminal in the home linked to a

shared, centralized mainframe and use it as an electronic portal to the worlds

of commerce and news and entertainment media.

Dr. McCarthy, who taught briefly at Stanford in the early 1950s, returned there

in 1962 and in 1964 became the founding director of the Stanford Artificial

Intelligence Laboratory, or SAIL. Its optimistic, space-age goal, with financial

backing from the Pentagon, was to create a working artificial intelligence

system within a decade.

Years later he developed a healthy respect for the challenge, saying that

creating a “thinking machine” would require “1.8 Einsteins and one-tenth the

resources of the Manhattan Project.”

Artificial intelligence is still thought to be far in the future, though

tremendous progress has been made in systems that mimic many human skills,

including vision, listening, reasoning and, in robotics, the movements of limbs.

From the mid-’60s to the mid-’70s, the Stanford lab played a vital role in

creating some of these technologies, including robotics, machine vision and

natural language.

In 1972, the laboratory drew national attention when Stewart Brand, the founder

of The Whole Earth Catalog, wrote about it in Rolling Stone magazine under the

headline “SPACEWAR: Fanatic Life and Symbolic Death Among the Computer Bums.”

The article evoked the esprit de corps of a group of researchers who had been

freed to create their own virtual worlds, foreshadowing the emergence of

cyberspace. “Ready or not, computers are coming to the people,” Mr. Brand wrote.

Dr. McCarthy had begun inviting the Homebrew Computer Club, a Silicon Valley

hobbyist group, to meet at the Stanford lab. Among its growing membership were

Steven P. Jobs and Steven Wozniak, who would go on to found Apple. Mr. Wozniak

designed his first personal computer prototype, the Apple 1, to share with his

Homebrew friends.

But Dr. McCarthy still cast a jaundiced eye on personal computing. In the second

Homebrew newsletter, he suggested the formation of a “Bay Area Home Terminal

Club,” to provide computer access on a shared Digital Equipment computer. He

thought a user fee of $75 a month would be reasonable.

Though Dr. McCarthy would initially miss the significance of the PC, his early

thinking on electronic commerce would influence Mr. Diffie at the Stanford lab.

Drawing on those ideas, Mr. Diffie began thinking about what would replace the

paper personal check in an all-electronic world.

He and two other researchers went on to develop the basic idea of public key

cryptography, which is now the basis of all modern electronic banking and

commerce, providing secure interaction between a consumer and a business.

A chess enthusiast, Dr. McCarthy had begun working on chess-playing computer

programs in the 1950s at Dartmouth. Shortly after joining the Stanford lab, he

engaged a group of Soviet computer scientists in an intercontinental chess match

after he discovered they had a chess-playing computer. Played by telegraph, the

match consisted of four games and lasted almost a year. The Soviet scientists

won.

John McCarthy was born on Sept. 4, 1927, into a politically engaged family in

Boston. His father, John Patrick McCarthy, was an Irish immigrant and a labor

organizer.

His mother, the former Ida Glatt, a Lithuanian Jewish immigrant, was active in

the suffrage movement. Both parents were members of the Communist Party. The

family later moved to Los Angeles in part because of John’s respiratory

problems.

He entered the California Institute of Technology in 1944 and went on to

graduate studies at Princeton, where he was a colleague of John Forbes Nash Jr.,

the Nobel Prize-winning economist and subject of Sylvia Nasar’s book “A

Beautiful Mind,” which was adapted into a movie.

At Princeton, in 1949, he briefly joined the local Communist Party cell, which

had two other members: a cleaning woman and a gardener, he told an interviewer.

But he quit the party shortly afterward.

In the ’60s, as the Vietnam War escalated, his politics took a conservative turn

as he grew disenchanted with leftist politics.

In 1971 Dr. McCarthy received the Turing Award, the most prestigious given by

the Association for Computing Machinery, for his work in artificial intelligence.

He was awarded the Kyoto Prize in 1988, the National Medal of Science in 1991

and the Benjamin Franklin Medal in 2003.

Dr. McCarthy was married three times. His second wife, Vera Watson, a member of

the American Women’s Himalayan Expedition, died in a climbing accident on

Annapurna in 1978.

Besides his daughter Sarah, of Nevada City, Calif., he is survived by his wife,

Carolyn Talcott, of Stanford; another daughter, Susan McCarthy, of San

Francisco; and a son, Timothy, of Stanford.

He remained an independent thinker throughout his life. Some years ago, one of

his daughters presented him with a license plate bearing one of his favorite

aphorisms: “Do the arithmetic or be doomed to talk nonsense.”

John McCarthy, 84, Dies;

Computer Design Pioneer,

NYT,

25.10.2011,

https://www.nytimes.com/2011/10/26/

science/26mccarthy.html

Computer Wins on ‘Jeopardy!’:

Trivial, It’s Not

February 16, 2011

The New York Times

By JOHN MARKOFF

YORKTOWN HEIGHTS, N.Y. — In the end, the humans on “Jeopardy!” surrendered

meekly.

Facing certain defeat at the hands of a room-size I.B.M. computer on Wednesday

evening, Ken Jennings, famous for winning 74 games in a row on the TV quiz show,

acknowledged the obvious. “I, for one, welcome our new computer overlords,” he

wrote on his video screen, borrowing a line from a “Simpsons” episode.

From now on, if the answer is “the computer champion on ‘Jeopardy!,’ ” the

question will be, “What is Watson?”

For I.B.M., the showdown was not merely a well-publicized stunt and a $1 million

prize, but proof that the company has taken a big step toward a world in which

intelligent machines will understand and respond to humans, and perhaps

inevitably, replace some of them.

Watson, specifically, is a “question answering machine” of a type that

artificial intelligence researchers have struggled with for decades — a computer

akin to the one on “Star Trek” that can understand questions posed in natural

language and answer them.

Watson showed itself to be imperfect, but researchers at I.B.M. and other

companies are already developing uses for Watson’s technologies that could have

significant impact on the way doctors practice and consumers buy products.

“Cast your mind back 20 years and who would have thought this was possible?”

said Edward Feigenbaum, a Stanford University computer scientist and a pioneer

in the field.

In its “Jeopardy!” project, I.B.M. researchers were tackling a game that

requires not only encyclopedic recall, but the ability to untangle convoluted

and often opaque statements, a modicum of luck, and quick, strategic button

pressing.

The contest, which was taped in January here at the company’s T. J. Watson

Research Laboratory before an audience of I.B.M. executives and company clients,

played out in three televised episodes concluding Wednesday. At the end of the

first day, Watson was in a tie with Brad Rutter, another ace human player, at

$5,000 each, with Mr. Jennings trailing with $2,000.

But on the second day, Watson went on a tear. By night’s end, Watson had a

commanding lead with a total of $35,734, compared with Mr. Rutter’s $10,400 and

Mr. Jennings’ $4,800.

But victory was not cemented until late in the third match, when Watson was in

Nonfiction. “Same category for $1,200” it said in a manufactured tenor, and

lucked into a Daily Double. Mr. Jennings grimaced.

Even later in the match, however, had Mr. Jennings won another key Daily Double

it might have come down to Final Jeopardy, I.B.M. researchers acknowledged.

The final tally was $77,147 to Mr. Jennings’ $24,000 and Mr. Rutter’s $21,600.

More than anything, the contest was a vindication for the academic field of

computer science, which began with great promise in the 1960s with the vision of

creating a thinking machine and which became the laughingstock of Silicon Valley

in the 1980s, when a series of heavily funded start-up companies went bankrupt.

Despite its intellectual prowess, Watson was by no means omniscient. On Tuesday

evening during Final Jeopardy, the category was U.S. Cities and the clue was:

“Its largest airport is named for a World War II hero; its second largest for a

World War II battle.”

Watson drew guffaws from many in the television audience when it responded “What

is Toronto?????”

The string of question marks indicated that the system had very low confidence

in its response, I.B.M. researchers said, but because it was Final Jeopardy, it

was forced to give a response. The machine did not suffer much damage. It had

wagered just $947 on its result.

“We failed to deeply understand what was going on there,” said David Ferrucci,

an I.B.M. researcher who led the development of Watson. “The reality is that

there’s lots of data where the title is U.S. cities and the answers are

countries, European cities, people, mayors. Even though it says U.S. cities, we

had very little confidence that that’s the distinguishing feature.”

The researchers also acknowledged that the machine had benefited from the

“buzzer factor.”

Both Mr. Jennings and Mr. Rutter are accomplished at anticipating the light that

signals it is possible to “buzz in,” and can sometimes get in with virtually

zero lag time. The danger is to buzz too early, in which case the contestant is

penalized and “locked out” for roughly a quarter of a second.

Watson, on the other hand, does not anticipate the light, but has a weighted

scheme that allows it, when it is highly confident, to buzz in as quickly as 10

milliseconds, making it very hard for humans to beat. When it was less

confident, it buzzed more slowly. In the second round, Watson beat the others to

the buzzer in 24 out of 30 Double Jeopardy questions.

“It sort of wants to get beaten when it doesn’t have high confidence,” Dr.

Ferrucci said. “It doesn’t want to look stupid.”

Both human players said that Watson’s button pushing skill was not necessarily

an unfair advantage. “I beat Watson a couple of times,” Mr. Rutter said.

When Watson did buzz in, it made the most of it. Showing the ability to parse

language, it responded to, “A recent best seller by Muriel Barbery is called

‘This of the Hedgehog,’ ” with “What is Elegance?”

It showed its facility with medical diagnosis. With the answer: “You just need a

nap. You don’t have this sleep disorder that can make sufferers nod off while

standing up,” Watson replied, “What is narcolepsy?”

The coup de grâce came with the answer, “William Wilkinson’s ‘An Account of the

Principalities of Wallachia and Moldavia’ inspired this author’s most famous

novel.” Mr. Jennings wrote, correctly, Bram Stoker, but realized he could not

catch up with Watson’s winnings and wrote out his surrender.

Both players took the contest and its outcome philosophically.

“I had a great time and I would do it again in a heartbeat,” said Mr. Jennings.

“It’s not about the results; this is about being part of the future.”

For I.B.M., the future will happen very quickly, company executives said. On

Thursday it plans to announce that it will collaborate with Columbia University

and the University of Maryland to create a physician’s assistant service that

will allow doctors to query a cybernetic assistant. The company also plans to

work with Nuance Communications Inc. to add voice recognition to the physician’s

assistant, possibly making the service available in as little as 18 months.

“I have been in medical education for 40 years and we’re still a very

memory-based curriculum,” said Dr. Herbert Chase, a professor of clinical

medicine at Columbia University who is working with I.B.M. on the physician’s

assistant. “The power of Watson-like tools will cause us to reconsider what it

is we want students to do.”

I.B.M. executives also said they are in discussions with a major consumer

electronics retailer to develop a version of Watson, named after I.B.M.’s

founder, Thomas J. Watson, that would be able to interact with consumers on a

variety of subjects like buying decisions and technical support.

Dr. Ferrucci sees none of the fears that have been expressed by theorists and

science fiction writers about the potential of computers to usurp humans.

“People ask me if this is HAL,” he said, referring to the computer in “2001: A

Space Odyssey.” “HAL’s not the focus, the focus is on the computer on ‘Star

Trek,’ where you have this intelligent information seek dialog, where you can

ask follow-up questions and the computer can look at all the evidence and tries

to ask follow-up questions. That’s very cool.”

Computer Wins on

‘Jeopardy!’: Trivial, It’s Not, NYT, 16.2.2011,

http://www.nytimes.com/2011/02/17/science/17jeopardy-watson.html

Ken Olsen,

Who Built DEC Into a Power,

Dies at 84

February 7, 2011

The New York Times

By GLENN RIFKIN

Ken Olsen, who helped reshape the computer industry as a founder of the

Digital Equipment Corporation, at one time the world’s second-largest computer

company, died on Sunday. He was 84.

His family announced the death but declined to provide further details. He had

recently lived with a daughter in Indiana and had been a longtime resident of

Lincoln, Mass.

Mr. Olsen, who was proclaimed “America’s most successful entrepreneur” by

Fortune magazine in 1986, built Digital on $70,000 in seed money, founding it

with a partner in 1957 in the small Boston suburb of Maynard, Mass. With Mr.

Olsen as its chief executive, it grew to employ more than 120,000 people at

operations in more than 95 countries, surpassed in size only by I.B.M.

At its peak, in the late 1980s, Digital had $14 billion in sales and ranked

among the most profitable companies in the nation.

But its fortunes soon declined after Digital began missing out on some critical

market shifts, particularly toward the personal computer. Mr. Olsen was

criticized as autocratic and resistant to new trends. “The personal computer

will fall flat on its face in business,” he said at one point. And in July 1992,

the company’s board forced him to resign.

Six years later, Digital, or DEC, as the company was known, was acquired by the

Compaq Computer Corporation for $9.6 billion.

But for 35 years the enigmatic Mr. Olsen oversaw an expanding technology giant

that produced some of the computer industry’s breakthrough ideas.

In a tribute to him in 2006, Bill Gates, the Microsoft co-founder, called Mr.

Olsen “one of the true pioneers of computing,” adding, “He was also a major

influence on my life.”

Mr. Gates traced his interest in software to his first use of a DEC computer as

a 13-year-old. He and Microsoft’s other founder, Paul Allen, created their first

personal computer software on a DEC PDP-10 computer.

In the 1960s, Digital built small, powerful and elegantly designed

“minicomputers,” which formed the basis of a lucrative new segment of the

computer marketplace. Though hardly “mini” by today’s standards, the computer

became a favorite alternative to the giant, multimillion-dollar mainframe

computers sold by I.B.M. to large corporate customers. The minicomputer found a

market in research laboratories, engineering companies and other professions

requiring heavy computer use.

In time, several minicomputer companies sprang up around Digital and thrived,

forming the foundation of the Route 128 technology corridor near Boston.

Digital also spawned a generation of computing talent, lured by an open

corporate culture that fostered a free flow of ideas. A frequently rumpled

outdoorsman who preferred flannel shirts to business suits, Mr. Olsen, a brawny

man with piercing blue eyes, shunned publicity and ran the company as a large,

sometimes contentious family.

Many within the industry assumed that Digital, with its stellar engineering

staff, would be the logical company to usher in the age of personal computers,

but Mr. Olsen was openly skeptical of the desktop machines. He thought of them

as “toys” used for playing video games.

Still, most people in the industry say Mr. Olsen’s legacy is secure. “Ken Olsen

is the father of the second generation of computing,” said George Colony, who is

chief executive of Forrester Research and a longtime industry watcher, “and that

makes him one of the major figures in the history of this business.”

Kenneth Harry Olsen was born in Bridgeport, Conn., on Feb. 20, 1926, and grew up

with his three siblings in nearby Stratford. His parents, Oswald and Elizabeth

Svea Olsen, were children of Norwegian immigrants.

Mr. Olsen and his younger brother Stan lived their passion for electronics in

the basement of their Stratford home, inventing gadgets and repairing broken

radios. After a stint in the Navy at the end of World War II, Mr. Olsen headed

to the Massachusetts Institute of Technology, where he received bachelor’s and

master’s degrees in electrical engineering. He took a job at M.I.T.’s new

Lincoln Laboratory in 1950 and worked under Jay Forrester, who was doing

pioneering work in the nascent days of interactive computing.

In 1957, itching to leave academia, Mr. Olsen, then 31, recruited a Lincoln Lab

colleague, Harlan Anderson, to help him start a company. For financing they

turned to Georges F. Doriot, a renowned Harvard Business School professor and

venture capitalist. According to Mr. Colony, Digital became the first successful

venture-backed company in the computer industry. Mr. Anderson left the company

shortly afterward, leaving Mr. Olsen to put his stamp on it for more than three

decades.

In Digital’s often confusing management structure, Mr. Olsen was the dominant

figure who hired smart people, gave them responsibility and expected them “to

perform as adults,” said Edgar Schein, who taught organizational behavior at

M.I.T. and consulted with Mr. Olsen for 25 years. “Lo and behold,” he said,

“they performed magnificently.”

One crucial employee was Gordon Bell, a DEC vice president and the technical

brains behind many of Digital’s most successful machines. “All the alumni think

of Digital fondly and remember it as a great place to work,” said Mr. Bell, who

went on to become a principal researcher at Microsoft.

After he left Digital, Mr. Olsen began another start-up, Advanced Modular

Solutions, but it eventually failed. In retirement, he helped found the Ken

Olsen Science Center at Gordon College, a Christian school in Wenham, Mass.,

where an archive of his papers and Digital’s history is housed. His family

announced his death through the college.

Mr. Olsen’s wife of 59 years, Eeva-Liisa Aulikki Olsen, died in March 2009. A

son, Glenn, also died. Mr. Olsen’s survivors include a daughter, Ava Memmen;

another son, James; his brother Stan; and five grandchildren.

Ken Olsen, Who Built DEC

Into a Power, Dies at 84, NYT, 7.2.2011,

http://www.nytimes.com/2011/02/08/technology/

business-computing/08olsen.html

Advances Offer Path

to Shrink Computer Chips Again

August 30, 2010

The New York Times

By JOHN MARKOFF

Scientists at Rice University and Hewlett-Packard are reporting this week

that they can overcome a fundamental barrier to the continued rapid

miniaturization of computer memory that has been the basis for the consumer

electronics revolution.

In recent years the limits of physics and finance faced by chip makers had

loomed so large that experts feared a slowdown in the pace of miniaturization

that would act like a brake on the ability to pack ever more power into ever

smaller devices like laptops, smartphones and digital cameras.

But the new announcements, along with competing technologies being pursued by

companies like I.B.M. and Intel, offer hope that the brake will not be applied any

time soon.

In one of the two new developments, Rice researchers are reporting in Nano

Letters, a journal of the American Chemical Society, that they have succeeded in

building reliable small digital switches — an essential part of computer memory

— that could shrink to a significantly smaller scale than is possible using

conventional methods.

More important, the advance is based on silicon oxide, one of the basic building

blocks of today’s chip industry, thus easing a move toward commercialization.

The scientists said that PrivaTran, a Texas startup company, has made

experimental chips using the technique that can store and retrieve information.

These chips store only 1,000 bits of data, but if the new technology fulfills

the promise its inventors see, single chips that store as much as today’s

highest capacity disk drives could be possible in five years. The new method

involves filaments as thin as five nanometers in width — thinner than what the

industry hopes to achieve by the end of the decade using standard techniques.

The initial discovery was made by Jun Yao, a graduate researcher at Rice. Mr.

Yao said he stumbled on the switch by accident.

Separately, H.P. is to announce on Tuesday that it will enter into a commercial

partnership with a major semiconductor company to produce a related technology

that also has the potential of pushing computer data storage to astronomical

densities in the next decade. H.P. and the Rice scientists are making what are

called memristors, or memory resistors, switches that retain information without

a source of power.

“There are a lot of new technologies pawing for attention,” said Richard

Doherty, president of the Envisioneering Group, a consumer electronics market

research company in Seaford, N.Y. “When you get down to these scales, you’re

talking about the ability to store hundreds of movies on a single chip.”

The announcements are significant in part because they indicate that the chip

industry may find a way to preserve the validity of Moore’s Law. Formulated in

1965 by Gordon Moore, a co-founder of Intel, the law is an observation that the

industry has the ability to roughly double the number of transistors that can be

printed on a wafer of silicon every 18 months.

That has been the basis for vast improvements in technological and economic

capacities in the past four and a half decades. But industry consensus had

shifted in recent years to a widespread belief that the end of physical progress

in shrinking the size of modern semiconductors was imminent. Chip makers are now

confronted by such severe physical and financial challenges that they are

spending $4 billion or more for each new advanced chip-making factory.
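
As a rough illustration of the arithmetic behind that observation, the sketch below compounds an 18-month doubling period over the four and a half decades mentioned above. The function names and the idealized, perfectly regular doubling are assumptions made for illustration; they are not figures from the article or from any chip maker.

```python
def doublings(years: float, period_years: float = 1.5) -> float:
    """Number of doubling periods that fit in the given span of years."""
    return years / period_years


def growth_factor(years: float, period_years: float = 1.5) -> float:
    """Multiplicative growth after doubling once every period_years."""
    return 2.0 ** doublings(years, period_years)


if __name__ == "__main__":
    span = 45.0  # "four and a half decades", roughly 1965 to 2010
    print(f"doublings: {doublings(span):.0f}")
    print(f"growth factor: {growth_factor(span):,.0f}")
```

Under these assumptions, 45 years holds 30 doublings, which multiplies the starting transistor count by about one billion.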

I.B.M., Intel and other companies are already pursuing a competing technology

called phase-change memory, which uses heat to transform a glassy material from

an amorphous state to a crystalline one and back.

Phase-change memory has been the most promising technology for so-called flash

chips, which retain information after power is switched off.

The flash memory industry has used a number of approaches to keep up with

Moore’s Law without having a new technology. But it is as if the industry has

been speeding toward a wall, without a way to get over it.

To keep up speed on the way to the wall, the industry has begun building

three-dimensional chips by stacking circuits on top of one another to increase

densities. It has also found ways to get single transistors to store more

information. But these methods would not be enough in the long run.

The new technology being pursued by H.P. and Rice is thought to be a dark horse

by industry powerhouses like Intel, I.B.M., Numonyx and Samsung. Researchers at

those competing companies said that the phenomenon exploited by the Rice

scientists had been seen in the literature as early as the 1960s.

“This is something that I.B.M. studied before and which is still in the research

stage,” said Charles Lam, an I.B.M. specialist in semiconductor memories.

H.P. has for several years been making claims that its memristor technology can

compete with traditional transistors, but the company will report this week that

it is now more confident that its technology can compete commercially in the

future.

In contrast, the Rice advance must still be proved. Acknowledging that

researchers must overcome skepticism because silicon oxide has been known as an

insulator by the industry until now, Jim Tour, a nanomaterials specialist at

Rice, said he believed the industry would have to look seriously at the research

team’s new approach.

“It’s a hard sell, because at first it’s obvious it won’t work,” he said. “But

my hope is that this is so simple they will have to put it in their portfolio to

explore.”

Advances Offer Path to

Shrink Computer Chips Again, NYT, 30.8.2010,

http://www.nytimes.com/2010/08/31/science/31compute.html

Max Palevsky,

a Pioneer in Computers,

Dies at 85

May 6, 2010

The New York Times

By WILLIAM GRIMES

Max Palevsky, a pioneer in the computer industry and a founder of the

computer-chip giant Intel who used his fortune to back Democratic presidential

candidates and to amass an important collection of American Arts and Crafts

furniture, died on Wednesday at his home in Beverly Hills, Calif. He was 85.

The cause was heart failure, said Angela Kaye, his assistant.

Mr. Palevsky became intrigued by computers in the early 1950s when he heard the

mathematician John von Neumann lecture at the California Institute of Technology

on the potential for computer technology. Trained in symbolic logic and

mathematics, Mr. Palevsky was studying and teaching philosophy at the University

of California, Los Angeles, but followed his hunch and left the academy.

After working on logic design for the Bendix Corporation’s first computer, in

1957 he joined the Packard Bell Computer Corporation, a new division of the

electronics company Packard Bell.

In 1961, he and 11 colleagues from Packard Bell founded Scientific Data Systems

to build small and medium-size business computers, a market niche they believed

was being ignored by giants like I.B.M. The formula worked, and in 1969 Xerox

bought the company for $1 billion, with Mr. Palevsky taking home a 10 percent

share of the sale.

In 1968 he applied some of that money to financing a small start-up company in

Santa Clara to make semiconductors. It became Intel, today the world’s largest

producer of computer chips.

A staunch liberal, Mr. Palevsky first ventured into electoral politics in the

1960s when he became involved in the journalist Tom Braden’s race for lieutenant

governor of California and Robert F. Kennedy’s campaign for the presidency.

Mr. Palevsky pursued politics with zeal and whopping contributions of money. He

bet heavily on Senator George McGovern — who first began running for the

presidency in 1972 — donating more than $300,000 to a campaign that barely

existed.

His financial support and organizing work for Tom Bradley, a Los Angeles city

councilman, propelled Mr. Bradley, in 1973, to the first of his five terms as

mayor. During the campaign, Mr. Palevsky recruited Gray Davis as Mr. Bradley’s

chief fund-raiser, opening the door to a political career for Mr. Davis that

later led to the governorship.

Mr. Palevsky later became disenchanted with the power of money in the American

political system and adopted campaign finance reform as his pet issue.

Overcoming his lifelong aversion to Republican candidates, he raised money for

Senator John McCain of Arizona, an advocate of campaign finance reform, during

the 2000 presidential primary. Mr. Palevsky also became a leading supporter of

the conservative-backed Proposition 25, a state ballot initiative in 2000 that

would limit campaign contributions by individuals and ban contributions by

corporations.

Mr. Palevsky donated $1 million to the Proposition 25 campaign, his largest

political contribution ever. “I am making this million-dollar contribution in

hopes that I will never again legally be allowed to write huge checks to

California political candidates,” he told Newsweek.

His support put him in direct conflict with Governor Davis, the state Democratic

Party and labor unions, whose combined efforts to rally voter support ended in

the measure’s defeat.

Max Palevsky was born on July 24, 1924, in Chicago. His father, a house painter

who had immigrated from Russia, struggled during the Depression, and Mr.

Palevsky described his childhood as “disastrous.”

During World War II he served with the Army Air Corps doing electronics repair

work on airplanes in New Guinea. On returning home, he attended the University

of Chicago on the G.I. Bill, earning bachelor’s degrees in mathematics and

philosophy in 1948. He went on to do graduate work in mathematics and philosophy

at the University of Chicago, the University of California, Berkeley, and

U.C.L.A.

Money allowed him to indulge his interests. He collected Modernist art, but in

the early 1970s, while strolling through SoHo in Manhattan, he became fixated on

a desk by the Arts and Crafts designer Gustav Stickley. Mr. Palevsky amassed an

important collection of Arts and Crafts furniture and Japanese woodcuts, which

he donated to the Los Angeles County Museum of Art.

He also plunged into film production. He helped finance Terrence Malick’s

“Badlands” in 1973, and, with the former Paramount executive Peter Bart,

produced “Fun With Dick and Jane” in 2005, and, in 1977, “Islands in the

Stream.” In 1970 he rescued the foundering Rolling Stone magazine by buying a

substantial block of its stock.

Mr. Palevsky married and divorced five times. He is survived by a sister, Helen

Futterman of Los Angeles; a daughter, Madeleine Moskowitz of Los Angeles; four

sons: Nicholas, of Bangkok, Alexander and Jonathan, both of Los Angeles, and

Matthew, of Brooklyn; and four grandchildren.

Despite his groundbreaking work in the computer industry, Mr. Palevsky remained

skeptical about the cultural influence of computer technology. In a catalog

essay for an Arts and Crafts exhibition at the Los Angeles County Museum of Art

in 2005, he lamented “the hypnotic quality of computer games, the substitution

of a Google search for genuine inquiry, the instant messaging that has replaced

social discourse.”

He meant it too. “I don’t own a computer,” he told The Los Angeles Times in

2008. “I don’t own a cellphone, I don’t own any electronics. I do own a radio.”

Max Palevsky, a Pioneer

in Computers, Dies at 85, NYT, 6.5.2010,

http://www.nytimes.com/2010/05/07/us/07palevsky.html

H. Edward Roberts,

PC Pioneer,

Dies at 68

April 2, 2010

The New York Times

By STEVE LOHR

Not many people in the computer world remembered H. Edward Roberts, not after

he walked away from the industry more than three decades ago to become a country

doctor in Georgia. Bill Gates remembered him, though.

As Dr. Roberts lay dying last week in a hospital in Macon, Ga., suffering from

pneumonia, Mr. Gates flew down to be at his bedside.

Mr. Gates knew what many had forgotten: that Dr. Roberts had made an early and

enduring contribution to modern computing. He created the MITS Altair, the first

inexpensive general-purpose microcomputer, a device that could be programmed to

do all manner of tasks. For that achievement, some historians say Dr. Roberts

deserves to be recognized as the inventor of the personal computer.

For Mr. Gates, the connection to Dr. Roberts was also personal. It was writing

software for the MITS Altair that gave Mr. Gates, a student at Harvard at the

time, and his Microsoft partner, Paul G. Allen, their start. Later, they moved

to Albuquerque, where Dr. Roberts had set up shop.

Dr. Roberts died Thursday at the Medical Center of Middle Georgia, his son

Martin said. He was 68.

When the Altair was introduced in the mid-1970s, personal computers — then

called microcomputers — were mainly intriguing electronic gadgets for hobbyists,

the sort of people who tinkered with ham radio kits.

Dr. Roberts, it seems, was a classic hobbyist entrepreneur. He left his mark on

computing, built a nice little business, sold it and moved on — well before

personal computers moved into the mainstream of business and society.

Mr. Gates, as history proved, had far larger ambitions.

Over the years, there was some lingering animosity between the two men, and Dr.

Roberts pointedly kept his distance from industry events — like the 20th

anniversary celebration in Silicon Valley of the introduction of the I.B.M. PC

in 1981, which signaled the corporate endorsement of PCs.

But in recent months, after learning that Dr. Roberts was ill, Mr. Gates made a

point of reaching out to his former boss and customer. Mr. Gates sent Dr.

Roberts a letter last December and followed up with phone calls, another son,

Dr. John David Roberts, said. Eight days ago, Mr. Gates visited the elder Dr.

Roberts at his bedside in Macon.

“Any past problems between those two were long since forgotten,” said Dr. John

David Roberts, who had accompanied Mr. Gates to the hospital. He added that Mr.

Allen, the other Microsoft founder, had also called the elder Dr. Roberts

frequently in recent months.

On his Web site, Mr. Gates and Mr. Allen posted a joint statement, saying they

were saddened by the death of “our friend and early mentor.”

“Ed was willing to take a chance on us — two young guys interested in computers

long before they were commonplace — and we have always been grateful to him,”

the statement said.

When the small MITS Altair appeared on the January 1975 cover of Popular

Electronics, Mr. Gates and Mr. Allen plunged into writing a version of the Basic

programming language that could run on the machine.

Mr. Gates dropped out of Harvard, and Mr. Allen left his job at Honeywell in

Boston. The product they created for Dr. Roberts’s machine, Microsoft Basic, was

the beginning of what would become the world’s largest software company and

would make its founders billionaires many times over.

MITS was the kingpin of the fledgling personal computer business only briefly.

In 1977, Dr. Roberts sold his company. He walked away a millionaire. But as a

part of the sale, he agreed not to design computers for five years, an eternity

in computing. It was a condition that Dr. Roberts, looking for a change,

accepted.

He first invested in farmland in Georgia. After a few years, he switched course

and decided to revive a childhood dream of becoming a physician, earning his

medical degree in 1986 from Mercer University in Macon. He became a general

practitioner in Cochran, 35 miles northwest of the university.

In Albuquerque, Dr. Roberts, a burly, 6-foot-4 former Air Force officer, often

clashed with Mr. Gates, the skinny college dropout. Mr. Gates was “a very bright

kid, but he was a constant headache at MITS,” Dr. Roberts said in an interview

with The New York Times at his office in 2001.

“You couldn’t reason with him,” he added. “He did things his way or not at all.”

His former MITS colleagues recalled that Dr. Roberts could be hardheaded as

well. “Unlike the rest of us, Bill never backed down from Ed Roberts face to

face,” David Bunnell, a former MITS employee, said in 2001. “When they

disagreed, sparks flew.”

Over the years, people have credited others with inventing the personal

computer, including the Xerox Palo Alto Research Center, Apple and I.B.M. But

Paul E. Ceruzzi, a technology historian at the Smithsonian Institution, wrote in

“A History of Modern Computing” (MIT Press, 1998) that “H. Edward Roberts, the

Altair’s designer, deserves credit as the inventor of the personal computer.”

Mr. Ceruzzi noted the “utter improbability and unpredictability” of having one

of the most significant inventions of the 20th century come to life from such a

seemingly obscure origin. “But Albuquerque it was,” Mr. Ceruzzi wrote, “for it

was only at MITS that the technical and social components of personal computing

converged.”

H. Edward Roberts was born in Miami on Sept. 13, 1941. His father, Henry Melvin

Roberts, ran a household appliance repair service, and his mother, Edna Wilcher

Roberts, was a nurse. As a young man, he wanted to be a doctor and, in fact,

became intrigued by electronics working with doctors at the University of Miami

who were doing experimental heart surgery. He built the electronics for a

heart-lung machine. “That’s how I got into it,” Dr. Roberts recalled in 2001.

So he abandoned his intended field and majored in electrical engineering at

Oklahoma State University. Then, he worked on a room-size I.B.M. computer. But

the power of computing, Dr. Roberts recalled, “opened up a whole new world. And

I began thinking, What if you gave everyone a computer?”

In addition to his sons Martin, of Glenwood, Ga., and John David, of Eastman,

Ga., Dr. Roberts is survived by his mother, Edna Wilcher Roberts, of Dublin,

Ga.; his wife, Rosa Roberts, of Cochran; his sons Edward, of Atlanta, and Melvin

and Clark, both of Athens, Ga.; his daughter, Dawn Roberts, of Warner Robins,

Ga.; three grandchildren and one great-grandchild.

His previous two marriages, to Donna Mauldin Roberts and Joan C. Roberts, ended

in divorce.

His sons said Dr. Roberts never gave up his love for making things, for

tinkering and invention. He was an accomplished woodworker, making furniture for

his household, family and friends. He made a Star Wars-style light saber for a

neighbor’s son, using light-emitting diodes. And several years ago he designed

his own electronic medical records software, though he never tried to market it,

his son Dr. Roberts said.

“Once he figured something out,” he added, “he was on to the next thing.”

H. Edward Roberts, PC

Pioneer, Dies at 68, NYT, 2.4.2010,

http://www.nytimes.com/2010/04/03/business/03roberts.html

Robert Spinrad,

a Pioneer in Computing,

Dies at 77

September 7, 2009

The New York Times

By JOHN MARKOFF

Robert J. Spinrad, a computer designer who carried out pioneering

work in scientific automation at Brookhaven National Laboratory and who later

was director of Xerox’s Palo Alto Research Center while the personal computing

technology invented there in the 1970s was commercialized, died on Wednesday in

Palo Alto, Calif. He was 77.

The cause was Lou Gehrig’s disease, his wife, Verna, said.

Trained in electrical engineering before computer science was a widely taught

discipline, Dr. Spinrad built his own computer from discarded telephone

switching equipment while he was a student at Columbia.

He said that while he was proud of his creation, at the time most people had no

interest in the machines. “I may as well have been talking about the study of

Kwakiutl Indians, for all my friends knew,” he told a reporter for The New York

Times in 1983.

At Brookhaven he would design a room-size, tube-based computer he named Merlin,

as part of an early generation of computer systems used to automate scientific

experimentation. He referred to the machine, which was built before transistors

were widely used in computers, as “the last of the dinosaurs.”

After arriving at Brookhaven, Dr. Spinrad spent a summer at Los Alamos National

Laboratories, where he learned about scientific computer design by studying an

early machine known as Maniac, designed by Nicholas Metropolis, a physicist. Dr.

Spinrad’s group at Brookhaven developed techniques for using computers to run

experiments and to analyze and display data as well as to control experiments

interactively in response to earlier measurements.

Later, while serving as the head of the Computer Systems Group at Brookhaven,

Dr. Spinrad wrote a cover article on laboratory automation for the Oct. 6, 1967,

issue of Science magazine.

“He was really the father of modern laboratory automation,” said Joel Birnbaum,

a physicist who designed computers at both I.B.M. and Hewlett-Packard. “He had a

lot of great ideas about how you connected computers to instruments. He realized

that it wasn’t enough to just build a loop between the computer and the

apparatus, but that the most important piece of the apparatus was the

scientist.”

After leaving Brookhaven, Dr. Spinrad joined Scientific Data Systems in Los

Angeles as a computer designer and manager. When the company was bought by the

Xerox Corporation in an effort to compete with I.B.M., he participated in

Xerox’s decision to put a research laboratory next to the campus of Stanford.

Xerox’s Palo Alto Research Center pioneered the technology that led directly to

the modern personal computer and office data networks.

Taking over as director of the laboratory in 1978, Dr. Spinrad oversaw a period

when the laboratory’s technology was commercialized, including the first modern

personal computer, the Ethernet local area network and the laser printer.

However, as a copier company, Xerox was never a comfortable fit for the emerging

computing world, and many of the laboratory researchers left Xerox, often taking

their innovations with them.

At the center, Dr. Spinrad became adept at bridging the cultural gulf between

the lab’s button-down East Coast corporations and its unruly and innovative West

Coast researchers.

Robert Spinrad was born in Manhattan on March 20, 1932. He received an

undergraduate degree in electrical engineering from Columbia and a Ph.D. from

the Massachusetts Institute of Technology.

In addition to his wife, Verna, he is survived by two children, Paul, of San

Francisco, and Susan Spinrad Esterly, of Palo Alto, and three grandchildren.

Flying between Norwalk, Conn., and Palo Alto frequently, Dr. Spinrad once

recalled how he felt like Superman in reverse because he would invariably step

into the airplane’s lavatory to change into a suit for his visit to the company

headquarters.

Robert Spinrad, a

Pioneer in Computing, Dies at 77, NYT, 7.9.2009,

http://www.nytimes.com/2009/09/07/technology/07spinrad.html

Intel Adopts

an Identity in Software

May 25, 2009

The New York Times

By ASHLEE VANCE

SANTA CLARA, Calif. — Intel has worked hard and spent a lot of

money over the years to shape its image: It is the company that celebrates its

quest to make computer chips ever smaller, faster and cheaper with a quick

five-note jingle at the end of its commercials.

But as Intel tries to expand beyond the personal computer chip business, it is

changing in subtle ways. For the first time, its long unheralded software

developers, more than 3,000 of them, have stolen some of the spotlight from its

hardware engineers. These programmers find themselves at the center of Intel’s

forays into areas like mobile phones and video games.

The most attention-grabbing element of Intel’s software push is a version of the

open-source Linux operating system called Moblin. It represents a direct assault

on the Windows franchise of Microsoft, Intel’s longtime partner.

“This is a very determined, risky effort on Intel’s part,” said Mark

Shuttleworth, the chief executive of Canonical, which makes another version of

Linux called Ubuntu.

The Moblin software resembles Windows or Apple’s Mac OS X to a degree, handling

the basic functions of running a computer. But it has a few twists as well that

Intel says make it better suited for small mobile devices.

For example, Moblin fires up and reaches the Internet in about seven seconds,

then displays a novel type of start-up screen. People will find their

appointments listed on one side of the screen, along with their favorite

programs. But the bulk of the screen is taken up by cartoonish icons that show

things like social networking updates from friends, photos and recently used

documents.

With animated icons and other quirky bits and pieces, Moblin looks like a fresh

take on the operating system. Some companies hope it will give Microsoft a

strong challenge in the market for the small, cheap laptops commonly known as

netbooks. A polished second version of the software, which is in trials, should

start appearing on a variety of netbooks this summer.

“We really view this as an opportunity and a game changer,” said Ronald W.

Hovsepian, the chief executive of Novell, which plans to offer a customized

version of Moblin to computer makers. Novell views Moblin as a way to extend its

business selling software and services related to Linux.

While Moblin fits netbooks well today, it was built with smartphones in mind.

Those smartphones explain why Intel was willing to needle Microsoft.

Intel has previously tried and failed to carve out a prominent stake in the

market for chips used in smaller computing devices like phones. But the company

says one of its newer chips, called Atom, will solve this riddle and help it

compete against the likes of Texas Instruments and Qualcomm.

The low-power, low-cost Atom chip sits inside most of the netbooks sold today,

and smartphones using the chip could start arriving in the next couple of years.

To make Atom a success, Intel plans to use software for leverage. It needs

Moblin because most of the cellphone software available today runs on chips

whose architecture is different from Atom’s. To make Atom a worthwhile choice

for phone makers, there must be a supply of good software that runs on it.

“The smartphone is certainly the end goal,” said Doug Fisher, a vice president

in Intel’s software group. “It’s absolutely critical for the success of this

product.”

Though large, Intel’s software group has remained out of the spotlight for

years. Intel considers its software work a silent helping hand for computer

makers.

Mostly, the group sells tools that help other software developers take advantage

of features in Intel’s chips. It also offers free consulting services to help

large companies wring the most performance out of their code, in a bid to sell

more chips.

Renee J. James, Intel’s vice president in charge of software, explained, “You

can’t just throw hardware out there into the world.”

Intel declines to disclose its revenue from these tools, but it is a tiny

fraction of the close to $40 billion in sales Intel racks up every year.

Still, the software group is one of the largest at Intel and one of the largest

such organizations at any company.

In the last few years, Intel’s investment in Linux, the main rival to Windows,

has increased. Intel has hired some of the top Linux developers, including Alan

Cox from Red Hat, the leading Linux seller, last year. Intel pays these

developers to improve Linux as a whole and to further the company’s own projects

like Moblin.

“Intel definitely ranks pretty highly when it comes to meaningful

contributions,” Linus Torvalds, who created the core of Linux and maintains the

software, wrote in an e-mail message. “They went from apparently not having much

of a strategy at all to having a rather wide team.”

Intel has also bought software companies. Last year, it acquired OpenedHand, a

company whose work has turned into the base of the new Moblin user interface.

It has also bought a handful of software companies with expertise in gaming and

graphics technology. Such software is meant to create a foundation to support

Intel’s release of new high-powered graphics chips next year. Intel hopes the

graphics products will let it compete better against Nvidia and Advanced Micro

Devices and open up another new business.

Intel tries to play down its competition with Microsoft. Since Moblin is open

source, anyone can pick it up and use it. Companies like Novell will be the ones

actually offering the software to PC makers, while Intel will stay in the

background. Still, Ms. James says that Intel’s relationship with Microsoft has

turned more prickly.

“It is not without its tense days,” she said.

Microsoft says Intel faces serious hurdles as it tries to stake a claim in the

operating system market.

“I think it will introduce some challenges for them just based on our experience

of having built operating systems for 25 years or so,” said James DeBragga, the

general manager of Microsoft’s Windows consumer team.

While Linux started out as a popular choice on netbooks, Microsoft now dominates

the market. Microsoft doubts whether something like Moblin’s glossy interface

will be enough to woo consumers who are used to Windows.

Intel says people are ready for something new on mobile devices, which are

geared more to the Internet than to running desktop-style programs.

“I am a risk taker,” Ms. James of Intel said. “I have that outlook that if

there’s a possibility of doing something different, we should explore trying

it.”

Intel Adopts an Identity in Software, NYT, 25.5.2009,

http://www.nytimes.com/2009/05/25/technology/business-computing/25soft.html

I.B.M. Unveils Real-Time Software to Find Trends in Vast Data Sets

May 21, 2009

The New York Times

By ASHLEE VANCE

New software from I.B.M. can suck up huge volumes of data from many sources

and quickly identify correlations within it. The company says it expects the

software to be useful in analyzing finance, health care and even space weather.

Bo Thidé, a scientist at the Swedish Institute of Space Physics, has been

testing an early version of the software as he studies the ways in which things

like gas clouds and particles cast off by the sun can disrupt communications

networks on Earth. The new software, which I.B.M. calls stream processing, makes

it possible for Mr. Thidé and his team of researchers to gather and analyze vast

amounts of information at a record pace.

“For us, there is no chance in the world that you can think about storing data

and analyzing it tomorrow,” Mr. Thidé said. “There is no tomorrow. We need a

smart system that can give you hints about what is happening out there right

now.”

I.B.M., based in Armonk, N.Y., spent close to six years working on the software

and has just moved to start selling a product based on it called System S. The

company expects it to encourage breakthroughs in fields like finance and city

management by helping people better understand patterns in data.

Steven A. Mills, I.B.M.’s senior vice president for software, notes that

financial companies have spent years trying to gain trading edges by sorting

through various sets of information. “The challenge in that industry has not

been ‘Could you collect all the data?’ but ‘Could you collect it all together

and analyze it in real time?’ ” Mr. Mills said.

To that end, the new software harnesses advances in computing and networking

horsepower in a fashion that analysts and customers describe as unprecedented.

Instead of creating separate large databases to track things like currency

movements, stock trading patterns and housing data, the System S software can

meld all of that information together. In addition, it could theoretically then

layer on databases that tracked current events, like news headlines on the

Internet or weather fluctuations, to try to gauge how such factors interplay

with the financial data.

Most computers, of course, can digest large stores of information if given

enough time. But I.B.M. has succeeded in performing very quick analyses on

larger hunks of combined data than most companies are used to handling.

“It’s that combination of size and speed that had yet to be solved,” said Gordon

Haff, an analyst at Illuminata, a technology industry research firm.

Conveniently for I.B.M., the System S software matured in time to match up with

the company’s “Smarter Planet” campaign. I.B.M. has flooded the airwaves with

commercials about using technology to run things like power grids and hospitals

more efficiently.

The company suggests, for example, that a hospital could tap the System S

technology to monitor not only individual patients but also entire patient

databases, as well as medication and diagnostics systems. If all goes according

to plan, the computing systems could alert nurses and doctors to emerging

problems.

Analysts say the technology could also provide companies with a new edge as they

grapple with doing business on a global scale.

“With globalization, more and more markets are heading closer to perfect

competition models,” said Dan Olds, an analyst with Gabriel Consulting. “This

means that companies have to get smarter about how they use their data and find

previously unseen opportunities.”

Buying such an advantage from I.B.M. has its price. The company will charge at

least hundreds of thousands of dollars for the software, Mr. Mills said.

I.B.M. Unveils Real-Time Software to Find Trends in Vast Data Sets, NYT, 21.5.2009,

http://www.nytimes.com/2009/05/21/technology/

business-computing/21stream.html

Burned

Once,

Intel Prepares New Chip